Data Lake or Data Swamp?

Big Data has been around long enough that pretty much everybody in the field can rattle off a list of tools and terms from that world: Hadoop, NoSQL, Hortonworks, Spark, Pig, Hive, Cassandra, Cloudera, Storm, HBase, and Data Lake, just to name a few. One term that caught my eye recently, and that never came up in my research on Big Data, was Data Swamp.

One of the first things that went through my mind was “Wait, what? What is a Data Swamp?” Data Lake I totally got, as my definition is “a massive dumping ground (pool) for your company’s data (in structured and unstructured formats) that makes it easily accessible for anybody to glean and gather information from and process as they see fit.” If you go to Google and search for “Data Lake Definition” you get a wide range of possible answers:

“A data lake is a storage repository that holds a vast amount of raw data in its native format until it is needed.” – TechTarget

“‘Data Lake’: centrally managed repository using low cost technologies to land any and all data that might potentially be valuable for analysis and operationalizing that insight.” – O’Reilly

“The data lake dream is of a place with data-centered architecture, where silos are minimized, and processing happens with little friction in a scalable, distributed environment. Data itself is no longer restrained by initial schema decisions, and can be exploited more freely by the enterprise.” – Forbes

“A data lake, as opposed to a data warehouse, contains the mess of raw unstructured or multi-structured data that for the most part has unrecognized value for the firm. While traditional data warehouses will clean up and convert incoming data for specific analysis and applications, the raw data residing in lakes are still waiting for applications to discover ways to manufacture insights.” – Wall Street & Technology

“A data lake is a massive, easily accessible, centralized repository of large volumes of structured and unstructured data.” – Techopedia

And you cannot forget everyone’s go-to for information… Wikipedia.

“A Data lake is a large storage repository that ‘holds data until it is needed’”

An interesting note on the Wikipedia page: “PricewaterhouseCoopers says that data lakes could ‘put an end to data silos.’” Maybe more to come on that in a future post… maybe.

And the list goes on…

Common themes among those quick definitions are large volume of data, unrestrained data structures, and lack of governance around the data (“until it is needed”).

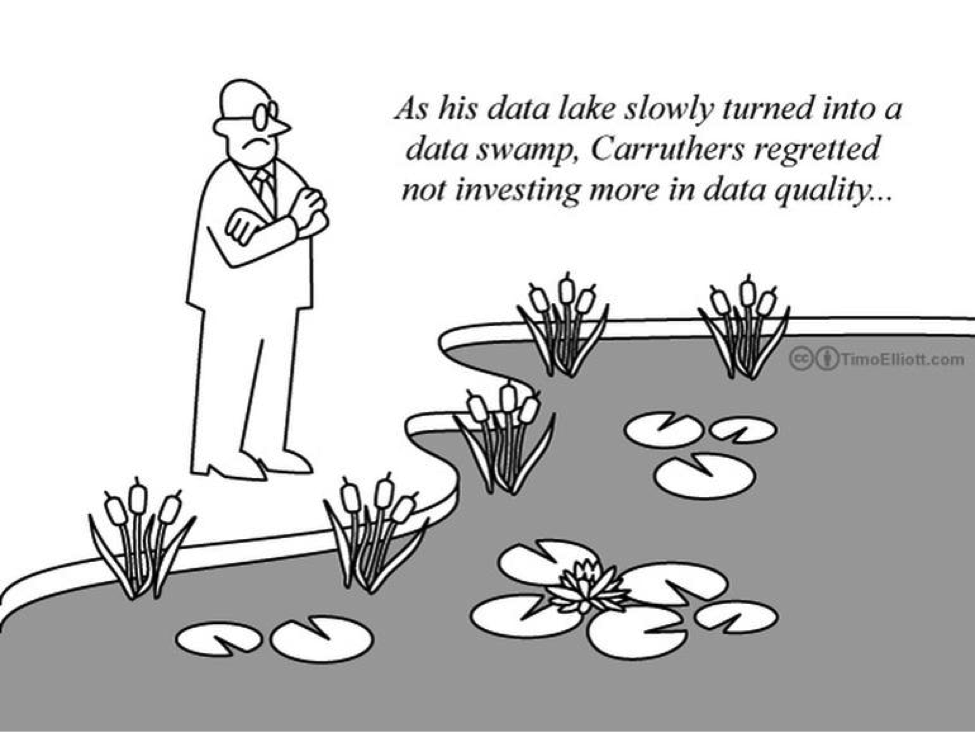

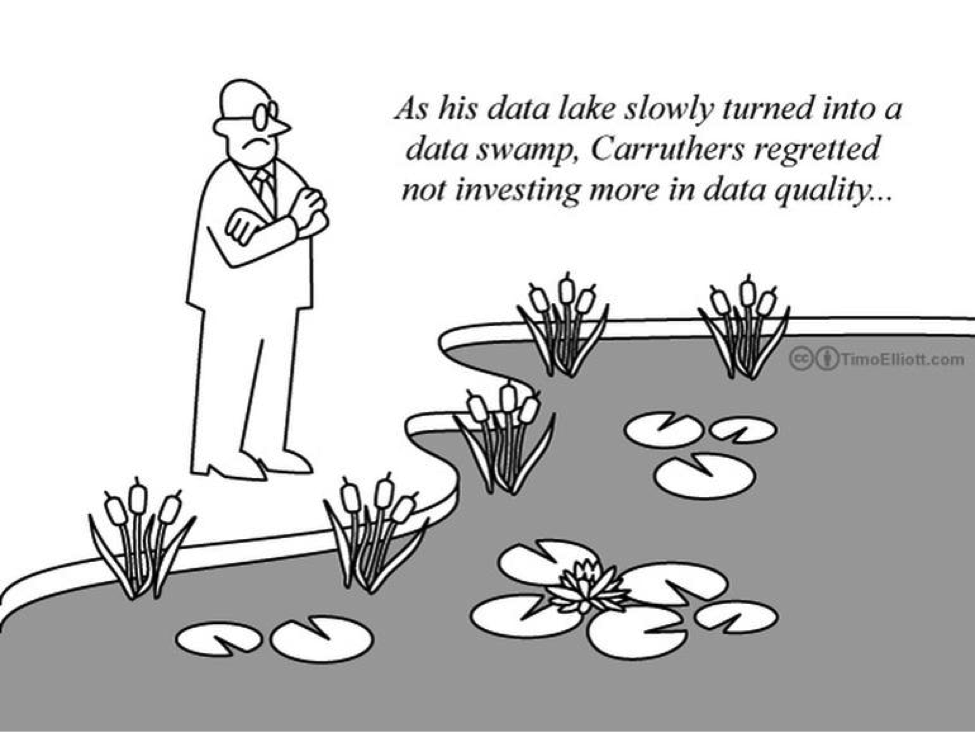

So now onto what a Data Swamp is. In my research on this I went back to Google and searched “Data Swamp Definition” and surprisingly enough, not a single definition came up by default. Plenty of links to articles about it but no big, bold box at the top with a definition. So what is a Data Swamp then?

My definition would be this: “A Data Swamp is an unstructured, ungoverned, and out of control Data Lake where, due to a lack of process, standards and governance, data is hard to find, hard to use and is consumed out of context.” It is hard to glean a real definition of a Data Swamp from the “Data Swamp Definition” links returned by Google. Some just throw up a “Danger, Will Robinson, Danger” type message and move on, and others try to sell you their magic software that will turn your swamp into a reservoir.

So how do you avoid a Data Swamp? Unfortunately, there is no cookie-cutter answer, as everybody uses their Data Lake differently depending on the type of data in it and how that data is used.

You need a vision for your Data Lake. Ask yourself these questions: “What is the purpose of my Data Lake?”, “What sort of analytics do I want to do?”, “What data sources do I need?” There are plenty more questions you can ask, but you get the idea. Don’t just start dumping data into the Data Lake and tell yourself you’ll figure it out later. Figure it out in advance, as it will make life easier down the road. If you find yourself having multiple goals, one Data Lake may be the answer, but don’t be afraid to silo the data into smaller Data Pools. The key here: think about long-term needs and not just immediate needs.

In Gartner’s article “Beware the Data Lake Fallacy” they put it this way: “Without descriptive metadata and a mechanism to maintain it, the data lake risks turning into a data swamp. And without metadata, every subsequent use of data means analysts start from scratch.” Having been a data guy for my whole consulting career, I have to agree. Without metadata around the data, you do not know the context, the format, the structure, etc. You need to know what your data is; otherwise you are bound to spin your wheels reanalyzing the data each time. By having your data properly analyzed, standardized and mastered, you minimize the rework needed each time you want to use that particular data asset for whatever analysis you are doing. Additional benefits include the reusability of the assets among users in the environment and across the organization, as they can easily identify what an asset is based on naming convention, file structure, etc. Users will also be able to properly identify the granularity of the data (you would hate to do an analysis and find out afterwards that not all your data was at the same grain). The same benefits of mastering your data in a traditional RDBMS apply in the Big Data space as well. I agree that with Big Data, in particular, there is an element of data exploration, but you still need to know the data in order to get quality results.
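To make the metadata point a little more concrete, here is a minimal sketch in Python of what “descriptive metadata and a mechanism to maintain it” could look like at its very simplest. Everything in it is an assumption of mine for illustration: the `DatasetMetadata` fields, the `Catalog` class and the example asset names are hypothetical, and a real lake would use a proper metadata store rather than an in-memory dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetMetadata:
    """Descriptive metadata for one asset in the lake (hypothetical fields)."""
    name: str            # follows whatever naming convention you agree on
    source_system: str   # where the data came from
    file_format: str     # e.g. "parquet", "csv", "json"
    grain: str           # what one record represents, e.g. "order_line"
    owner: str           # who to ask about this asset


class Catalog:
    """In-memory stand-in for a real metadata store (illustration only)."""

    def __init__(self):
        self._entries = {}

    def register(self, meta):
        # Refuse assets whose metadata is incomplete.
        missing = [key for key, value in vars(meta).items() if not value]
        if missing:
            raise ValueError(f"{meta.name or '<unnamed>'}: missing metadata {missing}")
        self._entries[meta.name] = meta

    def describe(self, name):
        return self._entries[name]

    def same_grain(self, names):
        # True only when every named asset is recorded at the same grain.
        return len({self._entries[n].grain for n in names}) == 1


catalog = Catalog()
catalog.register(DatasetMetadata("sales_orders", "erp", "parquet", "order", "sales-ops"))
catalog.register(DatasetMetadata("sales_order_lines", "erp", "parquet", "order_line", "sales-ops"))

# Check the grain before joining or aggregating the two assets together.
print(catalog.same_grain(["sales_orders", "sales_order_lines"]))
```

The idea is simply that nothing lands in the lake without a description, and that the grain is written down where an analyst can check it before combining assets rather than after.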

Data Governance. Odds are that if you’re reading this you already know what Data Governance is, but even in a Big Data environment you need to set up governance and security around your data. Unless you’re the system admin, you do not know who has access to what, and even then you probably don’t know how they’re going to use it. Keeping security measures in place also helps keep the Data Lake under control. Data Governance also extends to my first point about controlling what comes into the Data Lake. And please, keep the governance up to date with proper processes and controls.
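As a rough illustration of the “who has access to what” question, the sketch below shows a toy role-based read check with an audit trail. It is only a sketch under my own assumptions: the `AccessPolicy` class, the role names and the user are hypothetical, and in practice you would lean on the security features of your platform rather than roll your own.

```python
class AccessPolicy:
    """Toy role-based read policy for lake assets, with an audit trail."""

    def __init__(self):
        self._grants = {}     # dataset name -> set of roles allowed to read it
        self.audit_log = []   # (user, dataset, allowed) tuples

    def grant(self, dataset, role):
        self._grants.setdefault(dataset, set()).add(role)

    def revoke(self, dataset, role):
        self._grants.get(dataset, set()).discard(role)

    def check_read(self, user, roles, dataset):
        # Allowed when the user holds at least one role granted on the dataset.
        allowed = bool(self._grants.get(dataset, set()) & set(roles))
        # Record every attempt, so you can later see who used what.
        self.audit_log.append((user, dataset, allowed))
        if not allowed:
            raise PermissionError(f"{user} may not read {dataset}")
        return True


policy = AccessPolicy()
policy.grant("sales_orders", "analyst")
policy.check_read("dana", ["analyst"], "sales_orders")
```

Keeping the grants and the audit log in one place is what makes it possible to keep the governance up to date: a grant that is revoked takes effect on the next read, and the log shows how the data is actually being used.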

One last point about the magic software to control your Data Lake: I won’t say these products don’t have their benefits, but I think there are steps you can take internally with your Big Data environment before you need to pay a company for its bolt-on solution. So in summary, I leave you with this.